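Building a Convolutional Neural Network with TensorFlow

TensorFlow Handwritten Digit Recognition

This post shows how to build a convolutional neural network (CNN) with TensorFlow to solve the digit-recognizer competition on Kaggle.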

Data

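The Kaggle dataset is drawn from MNIST. train.csv contains 42,000 labeled images and test.csv contains 28,000 unlabeled ones. Each handwritten digit is a 28 × 28 grayscale image, stored as a single CSV row of 784 pixel values from 0 to 255. train.csv also has an extra label column.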
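As a minimal sketch of what this layout means in practice, the snippet below builds a tiny synthetic stand-in for train.csv and turns each 784-value row into a 28 × 28 array. This is the same row-major reshape that `tf.reshape(features["x"], [-1, 28, 28, 1])` performs inside the model. The column names `pixel0` to `pixel783` and the random pixel values are assumptions for illustration only.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for train.csv: a 'label' column followed by 784 pixel
# columns (assumed to be named pixel0 ... pixel783) holding values 0..255.
rng = np.random.default_rng(0)
n_rows = 5
pixels = rng.integers(0, 256, size=(n_rows, 28 * 28))
frame = pd.DataFrame(pixels, columns=[f"pixel{i}" for i in range(28 * 28)])
frame.insert(0, "label", rng.integers(0, 10, size=n_rows))

labels = frame.pop("label").to_numpy()     # shape (n_rows,)
flat = frame.to_numpy(dtype=np.float32)    # shape (n_rows, 784)
images = flat.reshape(-1, 28, 28)          # shape (n_rows, 28, 28)

# Row-major reshape: pixel k of a row lands at image row k // 28, column k % 28.
print(labels.shape, flat.shape, images.shape)  # (5,) (5, 784) (5, 28, 28)
```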

Model Architecture

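The CNN presented here consists of an input layer, two convolutional layers (32 and 64 filters of size 5 × 5), two pooling layers, one fully connected layer (1024 units, followed by dropout with rate 0.4), and an output layer of size 10. The convolutional layers use the ReLU activation function to introduce nonlinearity. The pooling layers use 2 × 2 max pooling with a stride of 2. The output layer produces 10 logits, and softmax turns them into values between 0 and 1 that sum to 1 across all 10 nodes, one probability per digit.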
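The three operations named above are simple enough to write out directly. The NumPy sketch below is an illustration only, since the model itself calls `tf.nn.relu`, `tf.layers.max_pooling2d` and `tf.nn.softmax`, and the helper names `relu`, `max_pool_2x2` and `softmax` are hypothetical. It also traces the spatial sizes. With "same" padding a convolution keeps its input size, so each pooling step halves it: 28 → 14 → 7.

```python
import numpy as np

def relu(x):
    # Element-wise max(x, 0): negative activations become 0.
    return np.maximum(x, 0)

def max_pool_2x2(x):
    # 2x2 max pooling with stride 2 on an (H, W, C) array with even H and W:
    # split each spatial axis into (blocks, 2) and take the max inside each block.
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).max(axis=(1, 3))

def softmax(logits):
    # Subtracting the row max avoids overflow and does not change the result.
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

print(relu(np.array([-2.0, 0.5])))

x = np.arange(16, dtype=np.float32).reshape(4, 4, 1)
print(max_pool_2x2(x)[:, :, 0])  # maxima of the four 2x2 blocks: 5, 7, 13, 15

# Shape walk for this model ('same' convolutions keep H and W unchanged).
after_pool1 = max_pool_2x2(np.zeros((28, 28, 32)))  # conv1 output -> pool1
after_pool2 = max_pool_2x2(np.zeros((14, 14, 64)))  # conv2 output -> pool2
print(after_pool1.shape, after_pool2.shape)  # (14, 14, 32) (7, 7, 64)

probs = softmax(np.array([[2.0, 1.0, 0.1]]))
print(probs, probs.sum(axis=-1))
```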

The model is built following the TensorFlow Tutorials and uses the TensorFlow 1.x `tf.layers` and `tf.estimator` APIs.

Code

```python
"""
File Name: tf_cnn.py
Author: ce39906
mail: ce39906@163.com
Created Time: 2018-10-25 10:55:56
"""

# Written against the TensorFlow 1.x API (tf.layers, tf.estimator, tf.logging).
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import numpy as np
import pandas as pd
import tensorflow as tf
from sklearn.preprocessing import StandardScaler

tf.logging.set_verbosity(tf.logging.INFO)


def cnn_model_fn(features, labels, mode):
    # input layer: each row of 784 pixels -> one 28x28 single-channel image
    input_layer = tf.reshape(features["x"], [-1, 28, 28, 1])

    # convolutional layer 1: 32 5x5 filters, 'same' padding keeps 28x28
    conv1 = tf.layers.conv2d(
        inputs=input_layer,
        filters=32,
        kernel_size=[5, 5],
        padding="same",
        activation=tf.nn.relu)

    # pooling layer 1: 2x2 max pooling, stride 2 -> 14x14x32
    pool1 = tf.layers.max_pooling2d(inputs=conv1, pool_size=[2, 2], strides=2)

    # convolutional layer 2: 64 5x5 filters -> 14x14x64
    conv2 = tf.layers.conv2d(
        inputs=pool1,
        filters=64,
        kernel_size=[5, 5],
        padding="same",
        activation=tf.nn.relu)

    # pooling layer 2 -> 7x7x64
    pool2 = tf.layers.max_pooling2d(inputs=conv2, pool_size=[2, 2], strides=2)

    # dense layer: flatten, 1024 ReLU units, dropout (active only in TRAIN mode)
    pool2_flat = tf.reshape(pool2, [-1, 7 * 7 * 64])
    dense = tf.layers.dense(inputs=pool2_flat, units=1024, activation=tf.nn.relu)
    dropout = tf.layers.dropout(
        inputs=dense,
        rate=0.4,
        training=mode == tf.estimator.ModeKeys.TRAIN)

    # logits layer: one raw score per digit class
    logits = tf.layers.dense(inputs=dropout, units=10)

    # predicted class and per-class probabilities
    predictions = {
        "classes": tf.argmax(input=logits, axis=1),
        "probabilities": tf.nn.softmax(logits, name="softmax_tensor"),
    }
    if mode == tf.estimator.ModeKeys.PREDICT:
        return tf.estimator.EstimatorSpec(mode=mode, predictions=predictions)

    # calculate loss (both TRAIN and EVAL mode)
    loss = tf.losses.sparse_softmax_cross_entropy(labels=labels, logits=logits)

    # configure the training op (TRAIN mode)
    if mode == tf.estimator.ModeKeys.TRAIN:
        optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.001)
        train_op = optimizer.minimize(
            loss=loss,
            global_step=tf.train.get_global_step())
        return tf.estimator.EstimatorSpec(mode=mode, loss=loss, train_op=train_op)

    # add evaluation metrics (EVAL mode)
    eval_metric_ops = {
        "accuracy": tf.metrics.accuracy(
            labels=labels, predictions=predictions["classes"])}
    return tf.estimator.EstimatorSpec(
        mode=mode, loss=loss, eval_metric_ops=eval_metric_ops)


def main():
    train_df = pd.read_csv('train.csv')
    test_df = pd.read_csv('test.csv')

    # the 'label' column holds the digit; the remaining 784 columns are pixels
    labels = np.array(train_df.pop('label'))

    # fit the scaler once on the labeled data and reuse the same statistics for
    # the test set (a separate scaler fitted on test.csv would standardize the
    # test images differently from the images the model was trained on)
    scaler = StandardScaler()
    data = scaler.fit_transform(np.float32(train_df.values)).astype(np.float32)
    test_data = scaler.transform(np.float32(test_df.values)).astype(np.float32)

    # hold out the last 10000 labeled images for validation
    validation_size = 10000
    train_data, valid_data = data[:-validation_size], data[-validation_size:]
    train_labels, valid_labels = labels[:-validation_size], labels[-validation_size:]

    classifier = tf.estimator.Estimator(
        model_fn=cnn_model_fn,
        model_dir="/tmp/cnn_model")

    # log the softmax probabilities every 50 training steps
    tensors_to_log = {"probabilities": "softmax_tensor"}
    logging_hook = tf.train.LoggingTensorHook(
        tensors=tensors_to_log, every_n_iter=50)

    # train the model
    train_input_fn = tf.estimator.inputs.numpy_input_fn(
        x={"x": train_data},
        y=train_labels,
        batch_size=100,
        num_epochs=None,
        shuffle=True)
    classifier.train(
        input_fn=train_input_fn,
        steps=20000,
        hooks=[logging_hook])

    # evaluate the model on the validation split and print the results
    eval_input_fn = tf.estimator.inputs.numpy_input_fn(
        x={"x": valid_data},
        y=valid_labels,
        num_epochs=1,
        shuffle=False)
    eval_results = classifier.evaluate(input_fn=eval_input_fn)
    print(eval_results)

    # predict labels for the test set
    predict_input_fn = tf.estimator.inputs.numpy_input_fn(
        x={"x": test_data},
        num_epochs=1,
        shuffle=False)
    predict_results = classifier.predict(input_fn=predict_input_fn)
    test_labels = np.array([result['classes'] for result in predict_results])

    # write the submission file; ImageId starts at 1
    submission = pd.DataFrame({
        'ImageId': np.arange(test_labels.shape[0]) + 1,
        'Label': test_labels})
    submission.to_csv('submission.csv', index=False)


if __name__ == '__main__':
    main()
```
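The `7 * 7 * 64` in the flattening `tf.reshape` call comes from the shape arithmetic below. This plain-Python sketch (the helper names are hypothetical) also counts each layer's trainable parameters, assuming one bias per filter or unit as `tf.layers.conv2d` and `tf.layers.dense` add by default.

```python
def pooled_size(size, pool=2, stride=2):
    # Output length of 'valid' pooling (the tf.layers.max_pooling2d default).
    return (size - pool) // stride + 1

def conv_params(kernel_h, kernel_w, in_channels, filters):
    # One kernel_h x kernel_w x in_channels kernel per filter, plus one bias each.
    return kernel_h * kernel_w * in_channels * filters + filters

def dense_params(in_units, out_units):
    # Full weight matrix plus one bias per output unit.
    return in_units * out_units + out_units

side = 28                      # input images are 28 x 28
side = pooled_size(side)       # conv1 ('same') keeps 28, pool1 -> 14
side = pooled_size(side)       # conv2 ('same') keeps 14, pool2 -> 7
flat_units = side * side * 64  # 7 * 7 * 64 = 3136 inputs to the dense layer

params = {
    "conv1": conv_params(5, 5, 1, 32),        # 832
    "conv2": conv_params(5, 5, 32, 64),       # 51264
    "dense": dense_params(flat_units, 1024),  # 3212288
    "logits": dense_params(1024, 10),         # 10250
}
total = sum(params.values())                  # 3274634

for name, count in params.items():
    print(f"{name:>6}: {count:>9,d} ({count / total:.1%})")
print(f" total: {total:>9,d}")
```

The count shows that the 1024-unit dense layer holds about 98% of the model's roughly 3.27 million parameters, while the two convolutional layers together hold fewer than 53,000.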

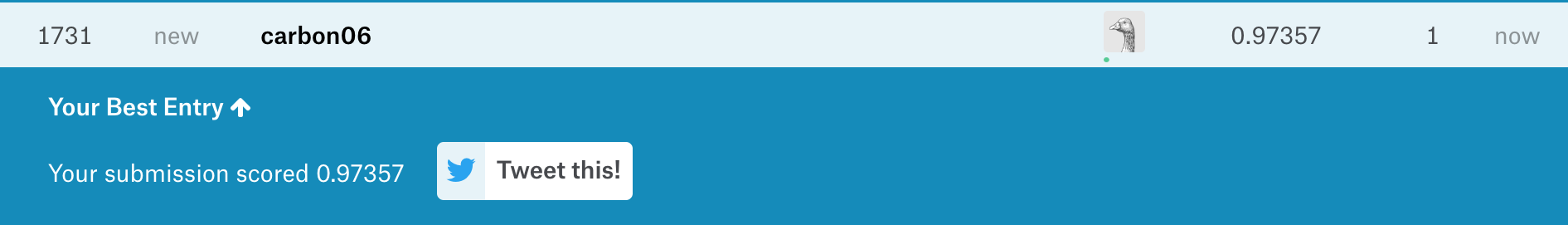

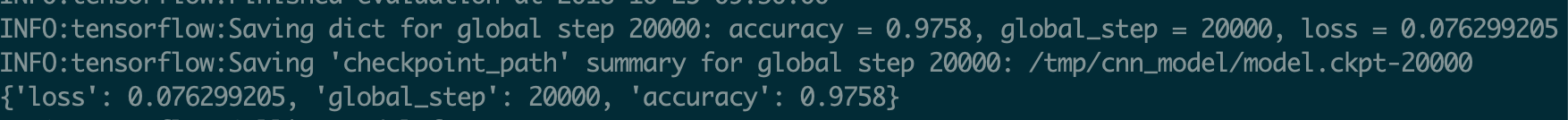
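The `main` function standardizes every pixel column with `StandardScaler`. Standardization only works as intended when the mean and standard deviation are estimated once, on the labeled training data, and then reused unchanged for any other data. Otherwise the same raw pixel value could map to different model inputs in training and at test time. The NumPy sketch below re-implements this idea by hand. The helper names are hypothetical and the random arrays stand in for real pixels. Like `StandardScaler` with its default settings, it uses the population standard deviation and leaves constant columns, such as pixels that are zero in every image, unscaled.

```python
import numpy as np

def fit_standardizer(train):
    # Per-column mean and population std (ddof=0), estimated once on training data.
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    # Constant columns would divide by zero; use a scale of 1 for them instead.
    std = np.where(std == 0.0, 1.0, std)
    return mean, std

def apply_standardizer(x, mean, std):
    # Reuse the training statistics for every split (train, validation, test).
    return ((x - mean) / std).astype(np.float32)

rng = np.random.default_rng(0)
train = rng.integers(0, 256, size=(200, 784)).astype(np.float32)
train[:, 0] = 0.0  # a pixel that is zero in every training image
test = rng.integers(0, 256, size=(50, 784)).astype(np.float32)

mean, std = fit_standardizer(train)
train_z = apply_standardizer(train, mean, std)
test_z = apply_standardizer(test, mean, std)
print(train_z.shape, test_z.shape)  # (200, 784) (50, 784)
```

In scikit-learn terms, this corresponds to calling `fit` (or `fit_transform`) on the training data and only `transform` on every other split.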

Results

Accuracy on the held-out validation set (the last 10,000 labeled images): 97.58%

Accuracy after submitting to Kaggle: 97.35%